A bit more than two years ago, I wrote a post laying out some of the evidence I had seen showing that people were submitting fraudulent responses on MTurk. Since that time, at least two research teams have conducted far more systematic investigations than what I originally did. This paper has been published at Political Science Research and Methods. I suspect that this one is not far behind.

These papers arrive at basically the same conclusion. In brief: There is fraudulent responding on MTurk. In large part, it comes from non-US respondents who use VPNs to circumvent location requirements. These respondents are mostly clicking through surveys as fast as possible, probably paying hardly any attention to anything. As a result, they degrade data quality considerably, attenuating treatment effects by perhaps 10-30%. These papers also give some good news: you can use free web services to identify and filter out these responses on the basis of suspicious IP addresses—either at the recruitment stage, or after you did your study.

The current situation

Those are valuable contributions and the authors have done the research community a service. At the same time, I’m writing this post because I saw some things that make me think that they paint an incomplete picture of what is going on, and that we have more work to do. I’ll get to the details in a second, but let me put the essentials up-front:

- Is there still fraud on MTurk?: Yes.

- Can you use attention-check questions to screen these responses out?: I wouldn’t count on it. Most attention checks are closed-ended, and I see suspicious responders that appear to be quite adept at passing closed-ended attention checks.

- Does screening out suspicious IP addresses solve problem?: It probably helps, but much of the problem remains.

- Is there anything else that can be done?: I think looking at responses to open-ended questions is a promising avenue for detecting fraud.

A fresh study

Ok so let’s get into it. Yanna Krupnikov and I ran a study last week. This is actually the same project that led to my post two years ago, wherein we got majorly burned by fraudulent responding. So we were quite careful this time. We included a bunch of closed-ended attention check questions. We also included one open-ended attention check (of sorts), and an open-ended debriefing question. And, we fielded the study via CloudResearch (a.k.a. TurkPrime), which is a platform that facilitates MTurk studies. CloudResearch is quite aware of MTurk data integrity problems, and has been at the forefront of combating them (e.g. here). We used the following CloudResearch settings:

- We blocked duplicate IP addresses.

- We blocked IPs with suspicious geocode locations.

- We used the option to verify worker country location.

- We blocked participants that CloudResearch has flagged as being low-quality. This is the default setting on CloudResearch, though note that CloudResearch also offers a more restrictive option, which is to only recruits participants who have been actively vetted and approved by them. (See here for more details.) I cannot say how things might be different if we had chosen that option. [Edit 8/30/21: My subsequent experiences with pre-approved respondents has been very positive. They appear to perform very well in attention checks, and do not show the signs of fraud described herein. See here for a working paper by CR researchers providing a more detailed look at their pre-approved list.]

- We did not set a minimum HIT approval rate. When you do so, CloudResearch limits recruitment to MTurkers who have completed at least 100 HITs in the past. So, we were concerned that setting such a minimum would limit recruitment to “professional respondents,” which could bear on our results. I do not think this makes too big a difference as concerns fraud—Turkers tend to have high approval ratings and I have previously seen fraudulent responding even when the approval rating set above 95%—but I cannot be sure. We have another study coming up and will hopefully be able to say more then.

An aside on CloudResearch: I was really impressed with the functionality of their platform and the work they have done to ensure data integrity. This post should not be construed as a slight against their efforts. As I say, it might be the case that the more restrictive option I mention above solves all the problems I describe here, albeit at the cost of a smaller participant pool. (Someone should try it and let us know!) But plenty of people do work on MTurk not through CloudResearch, so these issues merit discussion in any event.

Let’s get to the open-ended attention check I mention above. Our motivation in including this was that fraudulent responses appeared to have problems with these in the past (see my old post). We asked them, “For you, what is the most important meal of the day, and why? Please write one sentence.” We didn’t actually care what these respondents found to be the most important meal of the day. We just wanted a not-too-onerous way to confirm that they could read an English sentence and write something coherent in response.

By and large, they could. The vast majority of our respondents wrote sensible things, like “I think breakfast is the most important meal of the day as it gives a fresh start to a new day” or “Dinner is the most important meal of the day because I need to be full to be able to sleep later.”

Very nice and good

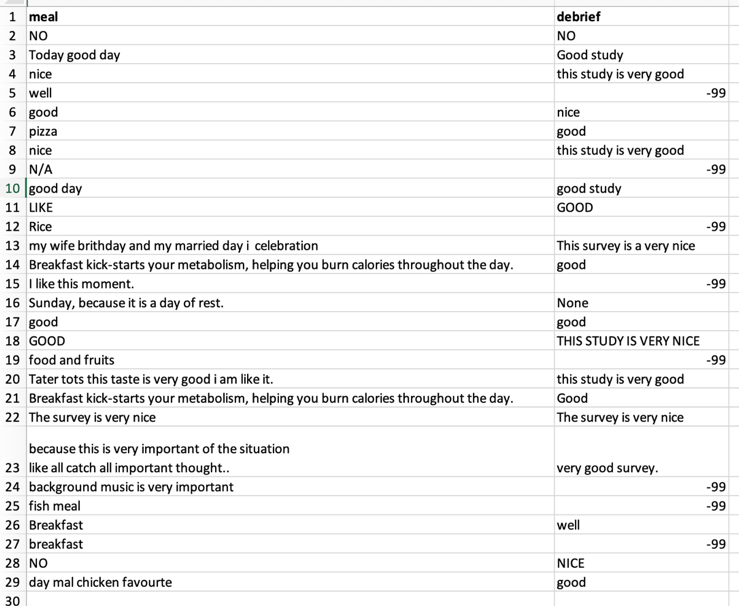

But several did not. Skimming through the responses, I spotted 28 that looked suspicious to me. Our survey had 300 responses, so this is nearly 10%. The suspicious responses appear below. The second column you can see here is the open-ended debriefing question. I’ll refer to these 28 rows in the dataset as the “suspicious responders.”

Some remarks. First, these look very much like the sort of thing we were contending with two years ago. In particular, various permutations of the words “good” and “nice” in the debriefing field are very common. We also see some people who misunderstood the question. (“Sunday, because it is a day of rest.”)

The responses on rows 14 and 21 merit particular attention. They are exactly the same (and plagiarized from this website). Moreover, these two responses were entered contemporaneously, as if the same person had our survey instrument open in two separate windows and was filling out the survey twice, simultaneously.

Traditional attention checks

How did the people I flagged as having suspicious open-endeds do on our closed-ended attention check questions? They did quite well!:

- We presented participants with a picture of an eggplant, and asked them to identify it, from the options “eggplant,” “aubergine,” “squash,” “brinjal.” (This question has been used in past work to identify people who are probably not in the U.S. Eggplants are called aubergine in the UK and elsewhere, and brinjal is the Indian word for eggplant.) All 28 of the suspicious responders chose the correct option (eggplant).

- We asked them, “For our research, careful attention to survey questions is critical! To show that you are paying attention please select ‘I have a question.’” The response options were “I understand,” “I do not understand,” and “I have a question.” 27 suspicious responders chose the correct answer, and one skipped the question.

- We asked respondents, “People are very busy these days and many do not have time to follow what goes on in the government. We are testing whether people read questions. To show that you’ve read this much, answer both ‘extremely interested’ and ‘very interested.’ ” There were five response options, ranging from “not interested at all” to “extremely interested.” 26 suspicious responders passed this check, choosing the two correct options.

- We showed respondents a calendar with the date of October 11, 2019 circled, and asked them to write that date. (Technically I suppose this is an open-ended question, though a more restrictive one than the “favorite meal” question.) This question comes from past work confirming that non-US participants tend to swap the month and date, relative to norms in the U.S. So they’d write “11/10/19” rather than “10/11/19”. 17 suspicious responders passed this question, which is 60.7%. In comparison, 91% of non-suspicious responders passed. Of the 11 suspicious responders who did not pass, 6 wrote “11/10/19” and 5 wrote various other seemingly-arbitrary dates.

We can look at one more thing. CloudResearch asks MTurk workers demographic questions after research studies, and it keeps track of whether respondents answer a question about gender in a consistent way. (Repeatedly-changing gender responses would be adduced as evidence that a person is answering randomly.)

For the respondents in our study, 167 (55%) had perfect consistency. 131 (44%) had missing values on this measure. (I assume people new to CloudResearch?) Only 3 (1%) had other scores: 90% consistency, 80% consistency, or 63.64% consistency. We can’t link these consistency scores to individual responses, so I don’t know whether the suspicious responders are the people with missing consistency scores.

Using IP Addresses to Detect Fraud

How about suspicious IPs? As I note above, the recent work on this issue recommends checking respondent IP addresses against public databases of suspicious IPs. We used the CloudResearch option to block as many of these as possible before entering the survey, but maybe we could detect others ex post.

I used four separate IP checkers to assess whether these responses are valid. Here is what they showed:

- IPHub, which is recommended by the Kennedy et al. PSRM piece as being the best service, flagged 1 of the 28 responses as suspicious. (It flagged 8 respondents with seemingly-fine open-ended responses as suspicious, but that’s another story.)

- IPIntel flagged 5 out of 28 as being suspicious.

- Proxycheck.io flagged 1 out of 28 as being suspicious (the same one as IPHub).

- IPVoid flagged 5 out of 28 as being questionable, and an additional 2 as being suspicious.

So, the IP checkers identified some questionable responses, but they would let most of them through. Consider this: If we went to extreme lengths and ran all four IP checkers, excluding a response if it is flagged by any of the four, we would only flag 11 responses total—less than half of those with suspicious open-endeds. (And if we did that, we would also need to evaluate the false-positive difficulty. These checkers might also flag responses that are actually fine. I am not looking at that here.)

Of course, as I write above, we invoked several CloudResearch tools that might have filtered out a bunch of problematic IP addresses before they even entered our survey. Maybe the IP-checking tools struggle here due to survivorship effects: only the especially wily fraudsters remain. But even if this is the case, these still represent about 10% of our data—easily enough to matter.

Is this really fraud?

Maybe I’m making something out of nothing here. If these folks pass four attention-check questions, consistently report the same gender, and have non-suspicious IP addresses, maybe they’re really ok and we should just consider them to be valid responses.

Maybe, but I’m skeptical. Not being able to write a simple sentence about what meal is most important to you seems, to me, like per se evidence that a respondent is not mentally invested to a degree that most studies require. Furthermore, the patterns in the open-endeds I display above are facially suspicious. The repeated invocation of “nice,” “good,” “very good,” “very nice,” etc. seem to reflect that these responses share a common origin, and are not simply getting a little lazy at the end of a survey instrument. And of course, I mention one clear-cut case of maliciousness above (plagiarizing from an external website).

And there’s a little more: we see some tentative evidence that these 28 suspicious responders behaved differently in the main part of our study. (28 is a small comparison group, so I will only describe general patterns.) Our study was a simple instrumentation check: a within-subjects design that involved viewing both a high-quality and a low-quality campaign advertisement (in a random order), and rating them. Among most respondents, the effects were huge (meaning our instrumentation did what we expected). But the suspicious responders rated both ads nearly the same—seemingly oblivious to obvious differences. They also appeared to favor the high ends of our various rating scales, compared to the non-suspicious responders.

If you asked me for my mental picture of what is going on, I suppose at this point I simply think there is a person or people—I don’t know whether they are in the US or not—who have come up with an apparatus to complete MTurk surveys in high volume. They might pay a modicum of attention—probably just enough to pass attention checks that multiple researchers use, which might by now be familiar. But probably not enough attention to count as a valid response in survey research. I suspect free-response, open-ended questions are fairly effective at identifying these respondents because of the effort required to answer them.

I encourage other researchers doing studies on MTurk to experiment with including other free response questions that might be revealing here. There is no need to re-use my “favorite meal” question. The fraudsters might adapt to it. Try asking respondents to write one sentence about a hobby they enjoy, about a musical performance they remember, or what are they main ways they consume caffeine (if they do). And please include a simple “Please use this box to report any comments on the survey” question, as suspicious responders appear especially prone to writing “good” and “nice” in response to such questions. It would also be helpful to have data points from people who recently asked open-ended questions and set MTurk approval ratings to be quite high.

Be nice to MTurk

A closing remark. Please do not use this information to besmirch MTurk as a resource for conducting survey research. Remember that about 90% of the responses we received look fine. Indeed, they appear to be higher in attention and quality than what I am accustomed to seeing on non-convenience samples. This is not a categorical problem with MTurk as a data source (and it drives me bananas when reviewers dismiss MTurk out of hand). It is a specific issue with an otherwise great resource, and our studies will be better if we solve it.